FlyEM Hemibrain Release

Bill Katz

Senior Engineer @ Janelia Research Campus

Welcome to the opening of the dvid.io website and the release of the Hemibrain dataset!

For this first blog post, I'll oddly tell you all the ways you can access the dataset without DVID, and end the post with how you can set up your own DVID installation. The full Hemibrain DVID dataset is composed of the grayscale image volume (34431 x 39743 x 41407 voxels, kept in the cloud) plus roughly another two terabytes of local data generated as part of the reconstruction process. (See Data Management in Connectomics for a blog post on how we manage data.)
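To get a feel for the scale, the quoted dimensions can be turned into a physical size and a voxel count. This is a back-of-the-envelope sketch that assumes isotropic 8 nm voxels (the "original 8x8x8nm resolution" listed for the EM data further down); the constant and function names are illustrative only.

```python
# Grayscale volume dimensions quoted above (x, y, z), in voxels.
DIMS_VOXELS = (34431, 39743, 41407)
# Assumption: isotropic 8 nm voxels, matching the listed EM resolution.
VOXEL_NM = 8


def physical_extent_um(dims, voxel_nm):
    """Return the (x, y, z) extent of the bounding box in micrometers."""
    return tuple(d * voxel_nm / 1000.0 for d in dims)


def voxel_count(dims):
    """Total number of voxels in the bounding box."""
    x, y, z = dims
    return x * y * z


extent = physical_extent_um(DIMS_VOXELS, VOXEL_NM)
print("extent (um):", [round(e, 1) for e in extent])  # → extent (um): [275.4, 317.9, 331.3]
print(f"voxels: {voxel_count(DIMS_VOXELS):.3e}")      # → voxels: 5.666e+13
```

So the bounding box is roughly a third of a millimeter on each side, holding on the order of 10^13 voxels, which is why the grayscale stays in the cloud.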

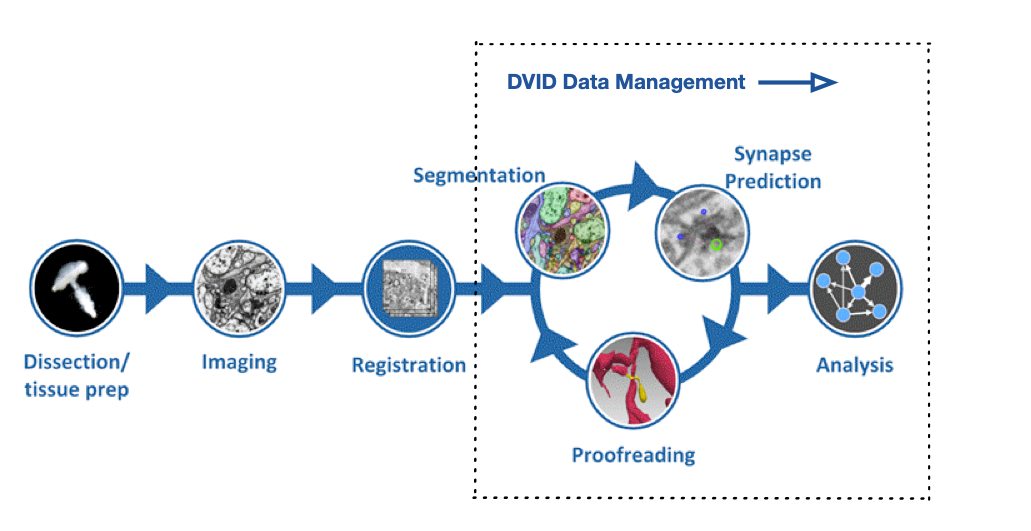

DVID was the central database for 50+ users, managing many versions of data throughout the connectome reconstruction, including:

- segmentation: supervoxel identifiers per voxel and their mapping to neuron body IDs

- regions of interest (ROIs) like the Mushroom Body

- meshes (ROIs, supervoxels, and neurons)

- neuron skeletons

- synapses and info to rapidly get synapse counts for any body

- cell/label information

- proofreader assignments for various protocols

- bookmarks (3d point annotations)

- various classifications of the volume, such as mitochondria

- miscellaneous data stored in versioned files
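The first item is a two-level structure: each voxel stores a supervoxel ID, and a separate mapping assigns supervoxels to neuron body IDs. One appeal of a scheme like this is that merging two bodies only edits the mapping, not the voxels. The toy sketch below illustrates the idea with NumPy; the function name is hypothetical, and treating an unmapped supervoxel as its own body is an assumption made for the example.

```python
import numpy as np


def supervoxels_to_bodies(sv_volume, sv_to_body):
    """Relabel a block of supervoxel IDs with body IDs.

    Assumption for this sketch: a supervoxel absent from the mapping is its
    own body, i.e. it keeps its supervoxel ID.
    """
    # Look up each distinct supervoxel once, then broadcast back to voxels.
    unique_svs, inverse = np.unique(sv_volume, return_inverse=True)
    bodies = np.array([sv_to_body.get(int(sv), int(sv)) for sv in unique_svs],
                      dtype=sv_volume.dtype)
    return bodies[inverse].reshape(sv_volume.shape)


# Hypothetical 2x3 block: supervoxels 1 and 2 belong to body 100.
block = np.array([[1, 1, 2], [3, 2, 1]], dtype=np.uint64)
mapping = {1: 100, 2: 100}
print(supervoxels_to_bodies(block, mapping))

# A merge only edits the mapping; the voxel data is untouched.
mapping[3] = 100
print(supervoxels_to_bodies(block, mapping))
```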

Here's where DVID fits into the reconstruction workflow:

There's a lot of data, and much of it may be irrelevant to a biologist trying to understand the Connectome of the Adult Drosophila Central Brain. For our research at Janelia, DVID ideally fades into the background, and most of what users see are connectomics-focused applications like neuPrint, NeuTu, and Neuroglancer (which is also embedded in other apps). All these connectomics-focused apps use DVID as a backend, although Neuroglancer can use a number of backends and can be optimized for particular versions of data (like the Hemibrain dataset snapshot at its release) via its "precomputed" storage format.

Let's briefly cover the various ways you can download or interact with the newly released data.

neuPrint

The first obvious stop is the neuPrint Hemibrain website. It's a nice web interface to query and visualize the released connectomics data without having to download anything locally.

Downloads

From the 26+ TB of data, we can generate a compact (25 MB) data model containing the adjacency matrix. We annotate brain region information for each connection to make the model richer.

You can download all the data injected into neuPrint (excluding the 3D data and skeletons) in CSV format.
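The CSV exports make simple graph questions easy to answer offline. A minimal sketch, assuming a connection table with one row per (upstream body, downstream body) pair; the column names bodyId_pre, bodyId_post, and weight are assumptions, so check the header of the file you actually download. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict


def load_adjacency(csv_file):
    """Build {pre_body: {post_body: weight}} from a connection table.

    The column names bodyId_pre, bodyId_post and weight are assumptions.
    """
    adjacency = defaultdict(dict)
    for row in csv.DictReader(csv_file):
        pre, post = int(row["bodyId_pre"]), int(row["bodyId_post"])
        # Sum weights in case a pair appears on more than one row.
        adjacency[pre][post] = adjacency[pre].get(post, 0) + int(row["weight"])
    return adjacency


def top_outputs(adjacency, body_id, n=5):
    """Strongest downstream partners of a body, by connection weight."""
    partners = adjacency.get(body_id, {})
    return sorted(partners.items(), key=lambda kv: kv[1], reverse=True)[:n]


# Tiny made-up table standing in for the real download.
sample = io.StringIO(
    "bodyId_pre,bodyId_post,weight\n"
    "1001,2002,12\n"
    "1001,3003,40\n"
    "2002,3003,5\n"
)
adj = load_adjacency(sample)
print(top_outputs(adj, 1001))  # → [(3003, 40), (2002, 12)]
```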

The skeletons of the 21,663 traced neurons are available as a tar file. Included is a CSV file, traced-neurons.csv, listing the instance and type of each traced body ID.
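Skeletons are handy for quick morphology measurements such as total cable length. The sketch below assumes the skeleton files use the common SWC text format (one node per line: id, type, x, y, z, radius, parent id, with -1 marking the root); verify that against the files in the tar before relying on it. The sample skeleton is made up, and lengths come out in whatever units the file's coordinates use.

```python
import io
import math


def read_swc(swc_file):
    """Parse SWC lines into {node_id: (x, y, z, parent_id)}; '#' lines are comments."""
    nodes = {}
    for line in swc_file:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        node_id, parent = int(fields[0]), int(fields[6])
        x, y, z = (float(v) for v in fields[2:5])
        nodes[node_id] = (x, y, z, parent)
    return nodes


def cable_length(nodes):
    """Sum of edge lengths from every node to its parent (roots have parent -1)."""
    total = 0.0
    for x, y, z, parent in nodes.values():
        if parent in nodes:
            px, py, pz, _ = nodes[parent]
            total += math.dist((x, y, z), (px, py, pz))
    return total


# Made-up three-node skeleton.
sample = io.StringIO(
    "# id type x y z radius parent\n"
    "1 1 0 0 0 1.0 -1\n"
    "2 3 3 4 0 0.5 1\n"
    "3 3 3 4 12 0.5 2\n"
)
print(cable_length(read_swc(sample)))  # → 17.0
```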

Neuroglancer

Our great collaborators at Google have not only produced exceptional automatic neuron segmentation to guide our proofreading, but their Neuroglancer web app has also become a fixture in the connectomics community. Jeremy Maitin-Shepard has enhanced his Neuroglancer tool for this Hemibrain data release. Here's a link to view the dataset directly in your browser.
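Neuroglancer links carry the whole viewer state as JSON after a "#!" in the URL fragment, so you can also build your own links. A minimal sketch: the host below is only an example deployment (an assumption), the layer names are arbitrary, and a real shared link usually carries more state (position, zoom, selected segments) than this bare layer list.

```python
import json
from urllib.parse import quote, unquote

# Example host only (assumption); other Neuroglancer deployments should accept the same fragment.
NEUROGLANCER_HOST = "https://neuroglancer-demo.appspot.com/"
BUCKET = "gs://neuroglancer-janelia-flyem-hemibrain"


def neuroglancer_url(layers, host=NEUROGLANCER_HOST):
    """Encode a minimal viewer state as JSON in the '#!' URL fragment."""
    state = {"layers": layers}
    return host + "#!" + quote(json.dumps(state, separators=(",", ":")))


def decode_state(url):
    """Inverse of neuroglancer_url: recover the JSON state from a link."""
    return json.loads(unquote(url.split("#!", 1)[1]))


layers = [
    {"type": "image", "name": "em",
     "source": f"precomputed://{BUCKET}/emdata/clahe_yz/jpeg"},
    {"type": "segmentation", "name": "segmentation",
     "source": f"precomputed://{BUCKET}/v1.0/segmentation"},
]
url = neuroglancer_url(layers)
print(url)
```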

The hemibrain EM data and proofread reconstruction are available at the Google Cloud Storage bucket gs://neuroglancer-janelia-flyem-hemibrain in the Neuroglancer precomputed format, for interactive visualization with Neuroglancer and programmatic access using libraries like CloudVolume (see below).

You can also download the data directly using Google's gsutil tool (use the -m option for bulk transfers, e.g., gsutil -m cp -r gs://bucket mydir).
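For grabbing a single small file, such as a volume's info metadata, rather than doing a bulk copy, objects in a publicly readable bucket can also be fetched over plain HTTPS from storage.googleapis.com. A minimal standard-library sketch; the helper names are hypothetical, and this is no substitute for gsutil -m when copying many chunks.

```python
from urllib.parse import quote
from urllib.request import urlopen


def gs_to_https(gs_path):
    """Map gs://bucket/object to its public storage.googleapis.com URL."""
    if not gs_path.startswith("gs://"):
        raise ValueError(f"not a gs:// path: {gs_path}")
    bucket, _, obj = gs_path[len("gs://"):].partition("/")
    return f"https://storage.googleapis.com/{bucket}/{quote(obj)}"


def fetch_bytes(gs_path, timeout=30):
    """Download one object over HTTPS (works only for publicly readable buckets)."""
    with urlopen(gs_to_https(gs_path), timeout=timeout) as resp:
        return resp.read()
```

For example, fetch_bytes("gs://neuroglancer-janelia-flyem-hemibrain/v1.0/segmentation/info") should return the JSON metadata describing that volume.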

Available data:

- EM data

  - Original aligned stack (at original 8x8x8nm resolution, as well as downsamplings): gs://neuroglancer-janelia-flyem-hemibrain/emdata/raw/jpeg, JPEG format

  - CLAHE applied over YZ cross sections (at original 8x8x8nm resolution, as well as downsamplings): gs://neuroglancer-janelia-flyem-hemibrain/emdata/clahe_yz/jpeg, JPEG format

- Segmentation

  - Volumetric segmentation labels (at original 8x8x8nm resolution, as well as downsamplings): gs://neuroglancer-janelia-flyem-hemibrain/v1.0/segmentation, Neuroglancer compressed segmentation format

- ROIs

  - Volumetric ROI labels (at 16x16x16nm resolution, as well as downsamplings): gs://neuroglancer-janelia-flyem-hemibrain/v1.0/rois, Neuroglancer compressed segmentation format

- Tissue type classifications

  - Volumetric labels (at 16x16x16nm resolution, as well as downsamplings): gs://neuroglancer-janelia-flyem-hemibrain/mask_normalized_round6, Neuroglancer compressed segmentation format

- Mitochondria detections

  - Volumetric labels (at 16x16x16nm resolution, as well as downsamplings): gs://neuroglancer-janelia-flyem-hemibrain/mito_20190717.27250582, Neuroglancer compressed segmentation format

- Synapse detections

  - Indexed spatially and by pre-synaptic and post-synaptic cell ID: gs://neuroglancer-janelia-flyem-hemibrain/v1.0/synapses, Neuroglancer annotation format
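Each precomputed volume has a JSON "info" file at its root that lists the available scales (the downsamplings mentioned above), their resolution, size, and encoding. A sketch of summarizing one; the sample document below is made up and abbreviated, so fetch the real info file to see actual sizes and encodings.

```python
import json


def summarize_info(info):
    """List (key, resolution_nm, size_voxels, encoding) for each scale of a precomputed volume."""
    return [
        (s["key"], tuple(s["resolution"]), tuple(s["size"]), s.get("encoding"))
        for s in info["scales"]
    ]


# Abbreviated, made-up info document showing the general shape of the format.
example_info = json.loads("""
{
  "@type": "neuroglancer_multiscale_volume",
  "type": "segmentation",
  "data_type": "uint64",
  "num_channels": 1,
  "scales": [
    {"key": "8.0x8.0x8.0", "resolution": [8, 8, 8], "size": [1000, 1000, 1000],
     "voxel_offset": [0, 0, 0], "chunk_sizes": [[64, 64, 64]],
     "encoding": "compressed_segmentation"},
    {"key": "16.0x16.0x16.0", "resolution": [16, 16, 16], "size": [500, 500, 500],
     "voxel_offset": [0, 0, 0], "chunk_sizes": [[64, 64, 64]],
     "encoding": "compressed_segmentation"}
  ]
}
""")

for row in summarize_info(example_info):
    print(row)
```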

TensorStore (Added 2020-04-03)

Google has just released TensorStore, a new library for efficiently reading and writing large multi-dimensional arrays. At this time, there are C++ and Python APIs. An example of reading the hemibrain segmentation is in the TensorStore documentation.
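For orientation, here is a hedged sketch of what such a read can look like; treat the TensorStore documentation as authoritative. The spec layout (a "gcs" kvstore with "bucket" and "path") follows recent TensorStore releases, and older releases placed "path" outside "kvstore". The function name and patch size are illustrative. TensorStore is imported lazily so the spec can be inspected without the library installed.

```python
# Spec for the v1.0 segmentation listed above (layout per recent TensorStore releases).
SEGMENTATION_SPEC = {
    "driver": "neuroglancer_precomputed",
    "kvstore": {
        "driver": "gcs",
        "bucket": "neuroglancer-janelia-flyem-hemibrain",
        "path": "v1.0/segmentation/",
    },
}


def read_segmentation_patch(x, y, z, size=64, spec=SEGMENTATION_SPEC):
    """Read a size x size patch of body IDs from z-slice z, with (x, y) as the corner.

    Requires `pip install tensorstore` and network access to Google Cloud Storage.
    """
    import tensorstore as ts  # lazy import: only needed for real reads

    store = ts.open(spec).result()
    # neuroglancer_precomputed volumes are indexed as [x, y, z, channel].
    return store[x:x + size, y:y + size, z, 0].read().result()
```

The result is a NumPy array of body IDs; pick coordinates inside the volume's bounds, which you can check from store.domain.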

CloudVolume

The Seung Lab's CloudVolume Python client lets you programmatically access Neuroglancer precomputed data. CloudVolume currently handles precomputed images (sharded and unsharded), skeletons (sharded and unsharded), and meshes (unsharded legacy format only). It doesn't handle annotations at the moment; those are usually handled by whatever proofreading system a given lab uses.
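A hedged sketch of pulling a small cutout around a point of interest with CloudVolume. The helper names and default box size are hypothetical; use_https=True asks CloudVolume to read the public bucket over HTTPS rather than with Google credentials. CloudVolume is imported lazily so the bounding-box helper can be used on its own.

```python
def centered_bbox(center, size):
    """Return (start, stop) corners of a box of `size` voxels centered on `center`."""
    start = tuple(c - s // 2 for c, s in zip(center, size))
    stop = tuple(a + s for a, s in zip(start, size))
    return start, stop


def fetch_cutout(path, center, size=(128, 128, 64), mip=0):
    """Download an image or segmentation cutout around `center` (voxel coordinates at `mip`).

    Requires `pip install cloud-volume` and network access.
    """
    from cloudvolume import CloudVolume  # lazy import: only needed for real downloads

    vol = CloudVolume(path, mip=mip, use_https=True, progress=False)
    (x0, y0, z0), (x1, y1, z1) = centered_bbox(center, size)
    return vol[x0:x1, y0:y1, z0:z1]  # array shaped (x, y, z, channel)
```

For example, fetch_cutout("gs://neuroglancer-janelia-flyem-hemibrain/v1.0/segmentation", center=...) with a center you have checked lies inside the volume's bounds (vol.bounds on an opened CloudVolume).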

NeuTu

NeuTu is a proofreading workhorse for the FlyEM team. It allows users to view segmentation and to split or merge bodies if necessary. It also permits annotation, ROI creation, and many other features. Please visit this NeuTu documentation page for how to set up DVID with the Hemibrain dataset as described below.

Full Datasets with DVID

For those users who want to download and forge ahead on their own copy of our reconstruction data, you can download a replica of our production DVID system and the full Hemibrain databases.

<Clarification added 2020-03-24> You can quickly download a relatively small DVID executable which then allows access to grayscale data stored in the cloud, both in JPEG and raw format. All other data can be downloaded by type (e.g., synapses, ROIs, segmentation, etc.) so you can choose what you need.

Please refer to the Hemibrain DVID release page for download information.
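Once a DVID server is running, its data is reached through an HTTP API. The sketch below only builds request URLs and makes no network calls. The host and port, version UUID, and data instance names ("segmentation", "grayscale") are hypothetical, and the endpoint shapes reflect our reading of DVID's labelmap and grayscale APIs, so confirm them against the endpoint documentation for your DVID version.

```python
# Hypothetical local server; substitute your own host/port.
DVID_SERVER = "http://localhost:8000"


def dvid_url(*parts, server=DVID_SERVER):
    """Join path components onto the DVID HTTP API root."""
    return server.rstrip("/") + "/api/" + "/".join(str(p) for p in parts)


def label_at_url(uuid, instance, x, y, z):
    """URL intended to return the body label at one voxel of a labelmap instance."""
    return dvid_url("node", uuid, instance, "label", f"{x}_{y}_{z}")


def raw_subvolume_url(uuid, instance, size, offset):
    """URL for a raw XYZ subvolume: .../raw/0_1_2/<size>/<offset>."""
    size_s = "_".join(str(v) for v in size)
    offset_s = "_".join(str(v) for v in offset)
    return dvid_url("node", uuid, instance, "raw", "0_1_2", size_s, offset_s)
```

With the hypothetical UUID "abc123", label_at_url("abc123", "segmentation", 1, 2, 3) yields http://localhost:8000/api/node/abc123/segmentation/label/1_2_3, which any HTTP client can then GET.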

We'll be updating the documentation on this website over time.

Please drop us a note if you are running your own fork so we can keep you apprised of continuing work, documentation, and opportunities to push back your changes to the public server.